Developing Methods for Recognizing Pain in Children Based on Facial Expressions and Bodily Sweat

A recent study found that combining facial activity with electrodermal activity (EDA) can significantly improve automated pain detection in children. The approach draws on complementary information: facial expressions offer direct behavioral indicators of pain, while EDA reflects autonomic nervous system arousal tied to emotional and physiological states.

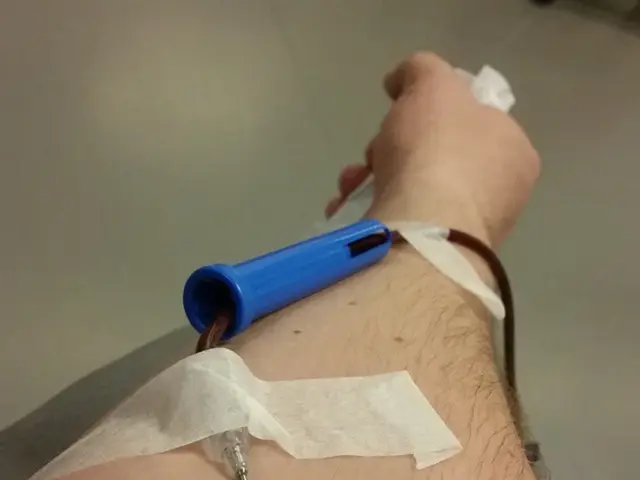

EDA provides detailed, high-resolution physiological features such as tonic and phasic components, skin conductance responses (SCRs), and signal variability, all of which correspond to autonomic arousal linked to pain. Facial activity analysis, in turn, captures subtle muscle movements and expressions that indicate pain intensity and affective response.

By fusing these modalities, automated systems can better differentiate pain-related signals from other emotions or states. This is particularly important in children who may have difficulty verbalizing pain.
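One simple way to picture such a fusion is late fusion, where each modality produces its own pain probability and the two are averaged. The sketch below is illustrative only: the source does not say which fusion method the study used, and the function name `late_fusion`, the weight `w_face`, and the example probabilities are all assumptions.

```python
import numpy as np


def late_fusion(p_face, p_eda, w_face=0.5):
    """Weighted average of per-sample pain probabilities from two models.

    Hypothetical helper: `p_face` and `p_eda` are assumed to be the outputs
    of separately trained facial and EDA classifiers, each in [0, 1].
    """
    if not 0.0 <= w_face <= 1.0:
        raise ValueError("w_face must lie in [0, 1]")
    p_face = np.asarray(p_face, dtype=float)
    p_eda = np.asarray(p_eda, dtype=float)
    if p_face.shape != p_eda.shape:
        raise ValueError("both modalities must score the same samples")
    return w_face * p_face + (1.0 - w_face) * p_eda


# Made-up probabilities for three observation windows.
p_face = [0.9, 0.4, 0.2]
p_eda = [0.7, 0.8, 0.1]

fused = late_fusion(p_face, p_eda)
is_pain = fused >= 0.5  # assumed decision threshold
```

In the second window the facial model alone would miss the event, but the EDA score pulls the fused probability above the threshold, which is the kind of complementarity the text describes.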

In the context of domain adaptation, where models trained on one dataset or population must generalize well to another, combining facial and EDA data helps mitigate domain shifts. Domain adaptation techniques can align features extracted from facial expression analysis and EDA signals across domains, improving robustness and reducing overfitting to specific conditions or sensor setups. The physiological grounding of EDA also provides a stable, objective measure that enhances generalizability.
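As an illustration of feature alignment across domains, the sketch below uses correlation alignment (CORAL), which matches the covariance of source features to that of target features. CORAL is a stand-in chosen here for brevity; the source does not name the domain adaptation technique involved. The function names, the regularizer `eps`, the added mean shift, and the random data are all assumptions.

```python
import numpy as np


def _sym_power(matrix, power):
    """Raise a symmetric positive-definite matrix to a real power."""
    vals, vecs = np.linalg.eigh(matrix)
    vals = np.clip(vals, 1e-12, None)  # guard against tiny negative eigenvalues
    return (vecs * vals**power) @ vecs.T


def coral_align(source, target, eps=1e-3):
    """Re-color source features so their covariance matches the target's.

    Rows are samples and columns are features (at least two columns).
    The mean shift at the end is an extra step added for this sketch.
    """
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    d = source.shape[1]
    cov_s = np.cov(source, rowvar=False) + eps * np.eye(d)
    cov_t = np.cov(target, rowvar=False) + eps * np.eye(d)
    centered = source - source.mean(axis=0)
    whitened = centered @ _sym_power(cov_s, -0.5)   # remove source correlations
    recolored = whitened @ _sym_power(cov_t, 0.5)   # impose target correlations
    return recolored + target.mean(axis=0)


# Synthetic stand-ins for fused facial + EDA feature vectors from two sites.
rng = np.random.default_rng(0)
source = rng.normal(size=(200, 3)) * [1.0, 2.0, 0.5]
target = rng.normal(size=(200, 3)) * [2.0, 0.5, 1.0] + 3.0

aligned = coral_align(source, target)
```

A classifier trained on `aligned` features with the source labels would then see second-order statistics close to those of the target domain, without needing any target labels.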

The study used preprocessing techniques such as Butterworth filtering and decomposition of the EDA signal into tonic and phasic components to extract meaningful features. Key EDA features included the mean and standard deviation of the tonic component, the amplitude and count of phasic SCR peaks, and autocorrelation to capture temporal patterns. Facial activity analysis relied on pain-related expression metrics, such as facial action units and the muscles involved.
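The EDA steps above can be sketched roughly as follows, using SciPy's Butterworth filter and peak finder. This is a minimal illustration, not the study's pipeline: the sampling rate, cutoff frequencies, filter order, peak threshold, the low-pass approximation of the tonic component, and the helper name `eda_features` are all assumptions.

```python
import numpy as np
from scipy.signal import butter, filtfilt, find_peaks


def eda_features(eda, fs=4.0, noise_cutoff_hz=1.0, tonic_cutoff_hz=0.05,
                 min_scr_amp=0.01):
    """Extract simple tonic/phasic features from one EDA window.

    Hypothetical helper; every default value is an assumed setting.
    """
    eda = np.asarray(eda, dtype=float)

    # 1) Zero-phase low-pass Butterworth filter to suppress sensor noise.
    b, a = butter(2, noise_cutoff_hz, btype="low", fs=fs)
    clean = filtfilt(b, a, eda)

    # 2) Approximate the tonic level as the very slow part of the signal;
    #    the phasic component is whatever remains.
    b_t, a_t = butter(2, tonic_cutoff_hz, btype="low", fs=fs)
    tonic = filtfilt(b_t, a_t, clean)
    phasic = clean - tonic

    # 3) SCR peaks: local maxima of the phasic signal above a threshold,
    #    at least one second apart.
    peaks, props = find_peaks(phasic, height=min_scr_amp, distance=int(fs))
    amplitudes = props["peak_heights"]

    # 4) Autocorrelation of the cleaned signal at a one-second lag.
    lag = int(fs)
    x = clean - clean.mean()
    energy = float(np.dot(x, x))
    autocorr = float(np.dot(x[:-lag], x[lag:]) / energy) if energy > 0 else 0.0

    return {
        "tonic_mean": float(tonic.mean()),
        "tonic_std": float(tonic.std()),
        "scr_count": int(len(peaks)),
        "scr_mean_amp": float(amplitudes.mean()) if len(peaks) else 0.0,
        "scr_max_amp": float(amplitudes.max()) if len(peaks) else 0.0,
        "autocorr_1s": autocorr,
    }


# Synthetic 60-second trace: flat baseline plus two SCR-like bumps.
t = np.arange(0, 60, 1 / 4.0)
signal = (2.0
          + 0.5 * np.exp(-((t - 20) ** 2) / 2)
          + 0.5 * np.exp(-((t - 40) ** 2) / 2))
feats = eda_features(signal, fs=4.0)
```

Dedicated decomposition methods exist for separating tonic and phasic activity; the low-pass split here is only the simplest option that keeps the example short.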

While the study does not report on specific child pain detection systems employing combined facial and EDA modalities, the described methods and feature extraction techniques from EDA, alongside facial expression analysis, provide a solid foundation for improving automated pain assessment in pediatric populations, especially when combined with domain adaptation frameworks.

However, the paper does not disclose the specific algorithms or techniques used for the fusion of models. Furthermore, it does not discuss the potential implications of the findings for pain management in children or indicate whether the fusion of models will be further developed or tested in future research.

Despite these limitations, the preliminary findings suggest that fusing the models improves accuracy compared with using EDA or video features alone. This could make it easier to determine pain levels in children, a task that challenges trained professionals and parents alike.

- Eye tracking, used alongside analysis of facial activity and electrodermal activity (EDA), could be a useful addition to health and wellness applications, offering insight into pain detection, especially in children.

- Artificial intelligence (AI) models with advanced feature extraction from both EDA signals and facial expressions may also benefit the fitness and exercise industry by improving the assessment of physical exertion and mental health.

- AI systems that combine EDA-based measurements with facial activity analysis could advance the understanding and management of pain, and make pain easier to interpret for people in various domains, including healthcare professionals and parents.