Development of an Automated System for Identifying Pain in Children through Facial Expressions and Skin Conductance Analysis

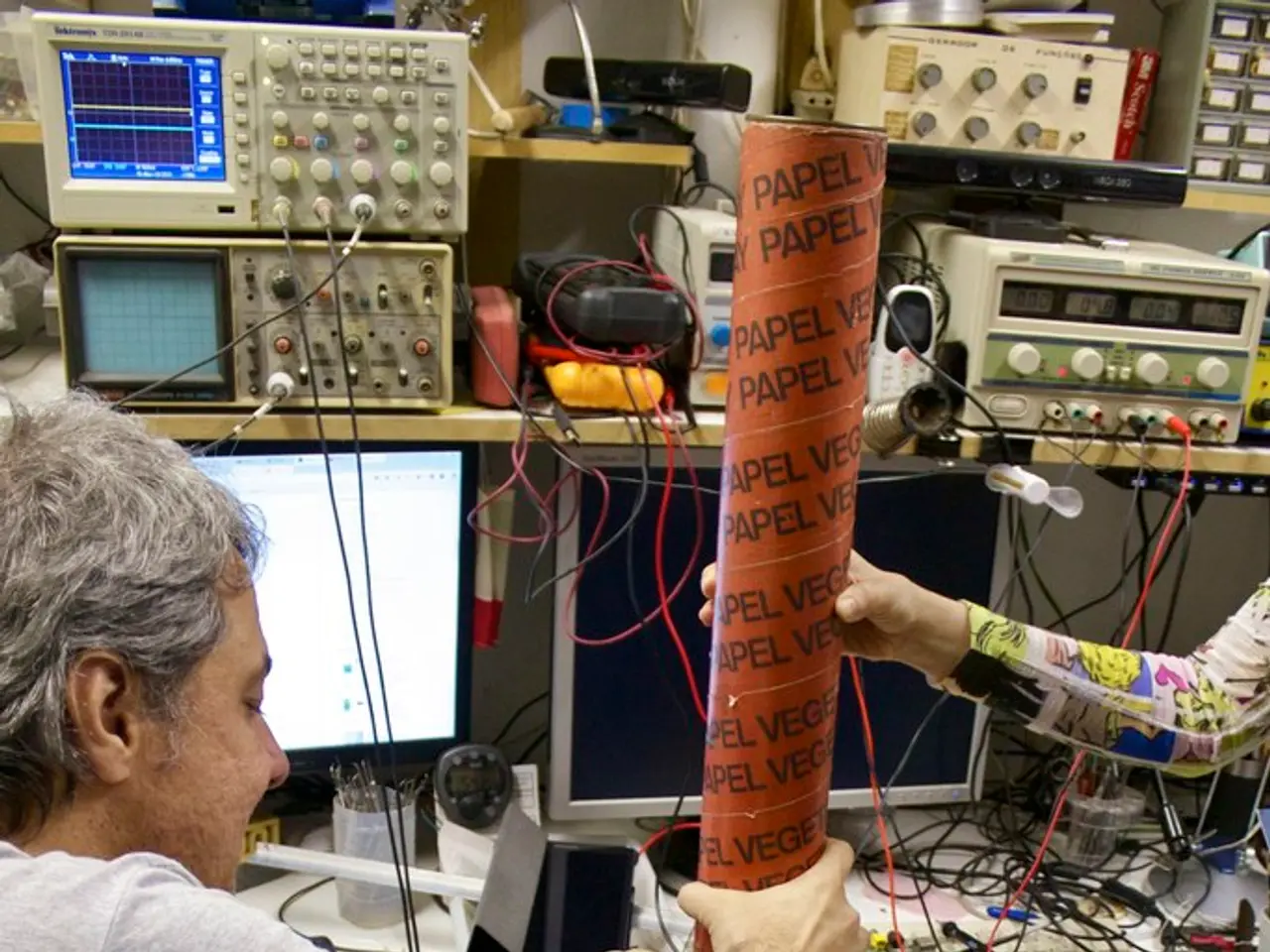

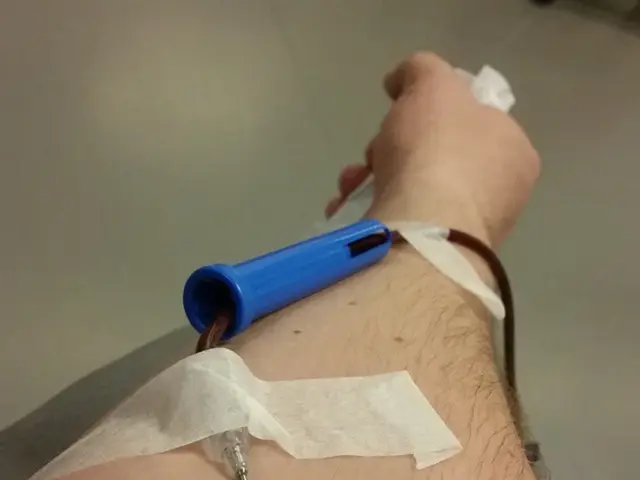

In a recent study, researchers take preliminary steps toward fusing models trained on video features and electrodermal activity (EDA) features for automated pain detection in children. The aim is to show that model fusion improves detection accuracy. This matters especially for children, whose pain levels can be difficult to judge accurately.

Both facial activity and EDA carry rich information about a child's pain level. The study therefore combines video features and EDA features by fusing the corresponding models. The fused system is expected to be more accurate than a model using either the video features or the EDA features alone.

The study targets accurate pain assessment in children, a task that can be difficult even for trained professionals and parents. It also includes a special test case involving domain adaptation. Its goal there is to show that model fusion improves pain-detection accuracy even when the models must adapt to a new domain.
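
The article does not say how the domain-adaptation case is handled. As one illustration only, a common label-free baseline is to standardize each feature separately within each domain (for example, each recording site). With that step, a constant shift in baseline skin conductance between sites is not mistaken for a change in pain. The sketch below assumes features arrive as plain Python lists with one domain label per sample. The function name `standardize_per_domain` is hypothetical, and this is an assumed baseline, not the study's method.

```python
from statistics import mean, pstdev


def standardize_per_domain(features, domains):
    """Z-score each feature column separately within each domain.

    Hypothetical helper: per-domain standardization is an assumed
    domain-adaptation baseline, not the study's documented method.
    features: list of equal-length feature vectors (lists of floats)
    domains:  list of domain labels, one per feature vector
    Returns new standardized vectors in the original sample order.
    """
    n_dims = len(features[0])
    out = [list(row) for row in features]
    for domain in set(domains):
        idx = [i for i, d in enumerate(domains) if d == domain]
        for j in range(n_dims):
            column = [features[i][j] for i in idx]
            mu = mean(column)
            sigma = pstdev(column) or 1.0  # constant column: avoid division by zero
            for i in idx:
                out[i][j] = (features[i][j] - mu) / sigma
    return out


# Toy example (invented numbers): two sites whose EDA levels differ by a constant offset.
X = [[1.0], [3.0], [11.0], [13.0]]
site = ["A", "A", "B", "B"]
print(standardize_per_domain(X, site))  # [[-1.0], [1.0], [-1.0], [1.0]]
```

After standardization the two sites become directly comparable. The step needs no pain labels from the new domain, only its unlabeled features.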

Multimodal approaches that combine facial expression analysis from video with physiological signals such as EDA exploit complementary information to recognize pain states more accurately. A related system combines facial expression (via ResNet-50) with speech rather than EDA (via VGGish with an LSTM and attention). It reached accuracies of 80-85% on multimodal pain datasets and generalized well across pain grades. It also remained robust in cross-dataset testing and transfer-learning settings.
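
The "LSTM with attention" component can be illustrated with generic attention pooling. Each hidden state h_t from a sequence model (for example, an LSTM run over VGGish audio embeddings) receives a score w·h_t. The scores are softmax-normalized into weights, and the weighted sum of the hidden states becomes a single fixed-length vector. In this sketch, the scoring vector `w` stands in for a learned parameter and is fixed for illustration. The function name `attention_pool` is hypothetical, and the sketch reflects an assumption about a typical design, not the cited system's exact architecture.

```python
import math


def attention_pool(hidden_states, w):
    """Collapse a sequence of hidden vectors into one context vector.

    hidden_states: list of equal-length vectors (e.g. LSTM outputs per time step)
    w: scoring vector; in a real model this would be learned (assumed fixed here)
    Returns (context_vector, attention_weights).
    """
    # One scalar relevance score per time step: dot product with w.
    scores = [sum(wi * hi for wi, hi in zip(w, h)) for h in hidden_states]
    m = max(scores)  # subtract the max before exp for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]  # softmax weights, sum to 1
    dim = len(hidden_states[0])
    # Weighted sum of hidden states, dimension by dimension.
    context = [sum(a * h[j] for a, h in zip(alphas, hidden_states)) for j in range(dim)]
    return context, alphas


ctx, weights = attention_pool([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
print(weights, ctx)  # [0.5, 0.5] [0.5, 0.5]
```

With a zero scoring vector every time step receives equal weight. A trained `w` instead shifts weight toward the time steps most indicative of pain.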

Similarly, datasets that include synchronized video and EDA recordings enable multimodal modeling, which enhances pain assessment by capturing both behavioral and autonomic responses. Using fusion methods (such as weighted-sum fusion or attention mechanisms) to integrate these modalities yields improved discrimination of pain intensity and better domain adaptation because it reduces reliance on any single source that may vary across contexts.
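
Weighted-sum (late) fusion can be sketched in a few lines. Assume each unimodal model outputs a per-sample pain probability. The fused score is then w * p_video + (1 - w) * p_eda, and w can be chosen by grid search to maximize ROC AUC on a validation set. The function names and toy numbers below are hypothetical, invented only to show the mechanism. They are not results from the study.

```python
def roc_auc(scores, labels):
    """ROC AUC via the Mann-Whitney statistic: the fraction of
    (pain, no-pain) pairs ranked correctly, with ties counting half.
    Assumes both classes (1 = pain, 0 = no pain) are present."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def fuse(p_video, p_eda, w):
    """Weighted-sum (late) fusion of per-sample pain probabilities."""
    return [w * v + (1.0 - w) * e for v, e in zip(p_video, p_eda)]


def choose_weight(p_video, p_eda, labels, steps=10):
    """Grid-search the video weight w in [0, 1] that maximizes validation AUC.
    Returns the first weight that reaches the best AUC, plus that AUC."""
    best_w, best_auc = 0.0, -1.0
    for k in range(steps + 1):
        w = k / steps
        auc = roc_auc(fuse(p_video, p_eda, w), labels)
        if auc > best_auc:
            best_w, best_auc = w, auc
    return best_w, best_auc


# Toy validation scores (invented for illustration).
labels = [1, 1, 0, 0]
p_video = [0.9, 0.4, 0.5, 0.1]
p_eda = [0.3, 0.8, 0.1, 0.6]
print(roc_auc(p_video, labels), roc_auc(p_eda, labels))  # 0.75 0.75
print(choose_weight(p_video, p_eda, labels))              # (0.3, 1.0)
```

In the toy data each modality alone ranks one pain/no-pain pair wrongly (AUC 0.75). Because the errors fall on different samples, the weighted sum ranks every pair correctly. An attention-based fusion would replace the fixed w with a weight computed from each input. In practice, the selected w should be evaluated on data that was not used to choose it.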

While unimodal models can perform well on their own, multimodal fusion generally outperforms them by compensating for each modality's limitations and providing a richer representation of the pain experience. In summary, fusing video and EDA in automated pain detection improves accuracy and robustness, particularly under domain adaptation. It does so by combining behavioral and physiological signals that each carry independent predictive value but work best together.

- Health and wellness, and pain detection in particular, could be transformed by advances such as the fusion of video and EDA models, which draw on artificial intelligence.

- In fitness and exercise, AI-based eye-tracking technology may aid performance analysis and support mental health by identifying physical and emotional stress during workouts.

- Beyond medicine and sports, the study of painting could also benefit from AI. Eye-tracking technology could help researchers measure viewers' emotional responses to different artworks, potentially informing mental health assessments.