Health AI needs oversight, but so do the non-AI algorithms behind clinical decisions, researchers argue.

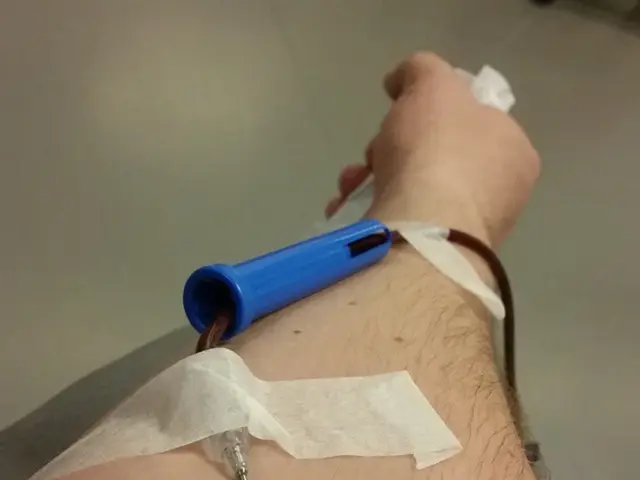

In healthcare, a physician's job goes beyond providing care. It involves constantly weighing the odds: a procedure's chances of success, a patient's potential risks, and when to re-test. As artificial intelligence (AI) enters clinical settings, it promises to reduce risk and help physicians prioritize high-risk patients. However, researchers from MIT, Equality AI, and Boston University are urging regulatory bodies to increase their oversight of AI, following a new rule issued by the U.S. Office for Civil Rights (OCR) under the Affordable Care Act (ACA).

In a commentary published in the October issue of the New England Journal of Medicine AI (NEJM AI), the researchers discuss this rule, which prohibits discrimination based on race, color, national origin, age, disability, or sex in "patient care decision support tools." The term covers both AI and non-automated tools used in medicine.

The rule, issued in response to President Joe Biden's Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence, aims to advance health equity. Marzyeh Ghassemi, an associate professor at MIT, views it as a significant step forward because it encourages equity-driven improvements to existing non-AI algorithms and clinical decision-support tools.

The number of AI-enabled devices approved by the Food and Drug Administration (FDA) has skyrocketed since the first such device was approved in 1995. As of October, the FDA had approved close to 1,000 of them, many designed to support clinical decision-making. Yet no regulatory body oversees the clinical risk scores produced by clinical decision-support tools, even though U.S. physicians use these scores widely.

To help address this gap, the MIT Abdul Latif Jameel Clinic for Machine Learning in Health will host another regulatory conference in March 2025. Last year's conference sparked debate among faculty, regulators, and industry experts on how AI in health should be regulated. Isaac Kohane, chair of the Department of Biomedical Informatics at Harvard Medical School, argues that clinical risk scores, although far simpler than complex AI algorithms, should be held to the same standards.

Moreover, although many decision-support tools do not use AI at all, they can perpetuate biases in healthcare just as AI tools can, and so they also need oversight. Regulating clinical risk scores is especially challenging because they are so widely embedded in electronic medical records. Co-author Maia Hightower of Equality AI stresses the need for transparency and nondiscrimination, but notes that regulation may prove particularly difficult under the incoming administration, given its focus on deregulation and its opposition to certain nondiscrimination policies.

The current regulatory landscape for clinical risk scores and decision-support tools, particularly AI-enabled devices, is evolving and complex, with both federal and state-level initiatives underway. Some states have enacted AI-related bills, while the U.S. Congress is considering a provision for a 10-year moratorium on the enforcement of state and local laws regulating AI systems. The outcome of these proposed federal and state-level regulations will significantly influence the future landscape of AI in U.S. healthcare.

- As AI enters clinical care, it promises to reduce risk and help physicians prioritize high-risk patients.

- Researchers from MIT, Equality AI, and Boston University advocate for increased regulatory oversight of AI. In a commentary in the October issue of the New England Journal of Medicine AI, they discuss a new OCR rule that prohibits discrimination in patient care decision support tools.

- This rule, a response to President Joe Biden's Executive Order, aims to advance health equity and encourages equity-driven improvements to existing AI and non-AI algorithms.

- The number of FDA-approved, AI-enabled devices has surged, with close to 1,000 approved as of October. However, no regulatory body oversees the clinical risk scores produced by clinical decision-support tools.

- Isaac Kohane, chair of the Department of Biomedical Informatics at Harvard Medical School, believes that clinical risk scores, although simpler, should be held to the same standards as complex AI algorithms.

- Beyond AI-enabled devices, traditional decision-support tools can also perpetuate biases in healthcare and require oversight.

- The future of AI in U.S. healthcare will depend heavily on the outcome of federal and state-level initiatives currently underway, such as a proposed 10-year moratorium on enforcing state and local laws that regulate AI systems.