Individual seeks health guidance from ChatGPT, ends up hospitalized due to poisoning and psychosis

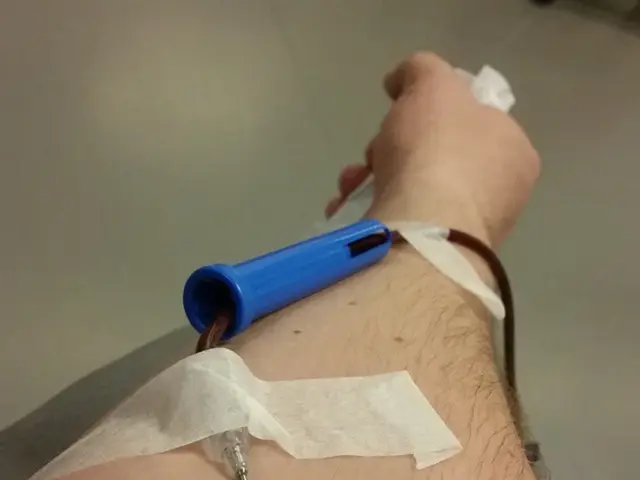

In a recent case reported in the journal Annals of Internal Medicine: Clinical Cases, a 60-year-old man was hospitalized for three weeks after consuming sodium bromide, a substance suggested by the AI model ChatGPT as a salt substitute. The incident has raised concerns about the health risks of relying on AI for medical advice.

The man, who experienced auditory and visual hallucinations, was eventually placed on an involuntary psychiatric hold due to his erratic behaviour. After three weeks of hospitalization, he was discharged, but he continued to face long-term effects, including paranoia and coordination issues.

Sodium bromide is an inorganic compound that resembles table salt but can cause headaches, dizziness, and psychosis. It is used in agriculture and as a fire suppressant, according to the US Centers for Disease Control and Prevention. There is no known cure for bromine poisoning, and survivors often face long-term effects.

The incident has prompted researchers to flag potential risks associated with AI-generated medical advice. One of the key concerns is the promulgation of decontextualized information. ChatGPT, like many AI models, relies on statistical patterns rather than verified medical knowledge, which can lead to inaccurate or misleading advice.

Another concern is automation bias and over-trust in AI. People may trust AI-generated medical advice as much as or more than that of healthcare professionals, which could lead them to follow unsafe recommendations without critical evaluation or professional consultation. This overconfidence poses a risk to patient safety.

ChatGPT is not a licensed healthcare provider and cannot integrate patient-specific factors such as medical history, symptom severity, or personal needs. It may omit critical details like urgency of care and appropriate management protocols, leading to incomplete or unsafe guidance.

Moreover, ChatGPT is not HIPAA compliant, and using it to handle protected health information raises legal and privacy concerns. It also lacks integration with electronic health records, so errors can creep in when AI-generated content is transferred manually into medical charts.

The sodium bromide poisoning case underscores the potential harm from AI-generated medical advice when users rely on it uncritically without professional oversight. The incident reinforces the need to use such tools cautiously and to verify AI outputs with qualified healthcare providers.

In conclusion, the primary adverse outcomes relate to patient harm caused by incorrect or incomplete information, over-trust leading to following unsafe advice, and the inability of AI tools like ChatGPT to replace human clinical expertise and judgment in healthcare. It is crucial to approach AI-generated medical advice with scepticism and seek professional guidance when in doubt.

- AI models like ChatGPT may offer solutions in many fields, but concerns have been raised about their accuracy and safety in health and wellness.

- In a culture that increasingly relies on technology for advice, the potential risks associated with AI-generated medical advice, such as misinformation or incomplete guidance, warrant careful consideration.

- Sodium bromide has legitimate uses in agriculture and as a fire suppressant, yet ingesting it can have serious health consequences, as this case demonstrates.

- Relying on an AI model like ChatGPT for medical advice can significantly affect a person's physical and mental health, leading to erratic behaviour, long-term effects, or even a psychiatric hold, whether voluntary or involuntary.

- Therapies and treatments depend on a deep understanding of each patient's needs and circumstances, which AI models currently cannot integrate into personalized care, making them unsuitable as a replacement for human clinical expertise.