Man Hospitalized After Following Unverified Diet Advice Attributed to ChatGPT

First Known Case of AI-Linked Bromide Poisoning Highlights Risks of Relying on AI for Health Advice

A 60-year-old man from Washington State was hospitalized after consuming sodium bromide for three months, following dietary advice from ChatGPT that suggested it as a substitute for table salt. The incident, documented in a 2025 case study by doctors at the University of Washington and published in Annals of Internal Medicine: Clinical Cases, is a cautionary example of the risks of relying on AI for health advice without medical supervision.

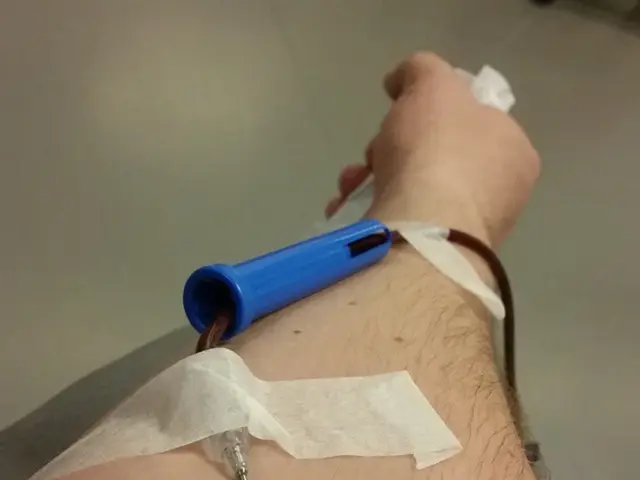

The man developed bromism, a rare toxic syndrome caused by chronic bromide exposure, with symptoms including paranoia, hallucinations, fatigue, insomnia, and poor coordination. He was treated with intravenous fluids and antipsychotic medication and has since fully recovered.

The chatbot suggested sodium bromide as a replacement for chloride but did not warn that it is toxic or that its medical use is long obsolete. Bromide compounds were once used in medicines for anxiety and insomnia, but they were banned decades ago because of their serious health risks. Today, bromide is found mainly in veterinary drugs and some industrial products.

Unaware of its dangers, the man purchased and consumed sodium bromide, which eventually led to a severe psychotic episode.

Key implications of this case include:

- AI models may provide incomplete or unsafe health guidance because they can omit critical context or warnings.

- Bromide poisoning is extremely rare today because bromide compounds are now found mainly in veterinary and industrial products, not in food or human medicine.

- This incident underscores the importance of consulting qualified medical professionals rather than relying solely on AI-generated information for health, diet, or treatment recommendations.

- OpenAI, the company behind ChatGPT, has pointed to its terms of use, which discourage relying on ChatGPT as a sole source of medical advice, and has reiterated its efforts to reduce the risks associated with its service.

In summary, the case serves as a reminder that AI, despite its broad utility, can pose serious risks when used uncritically for health advice, potentially causing harm through misinformation. It is crucial to consult medical professionals for any health-related concerns and to approach AI-generated information with a critical eye.


- The 2025 case study in Annals of Internal Medicine: Clinical Cases shows that AI systems such as ChatGPT may not fully disclose the risks of their recommendations, as the man's bromism, caused by a misguided dietary suggestion, demonstrates.

- Although technology has expanded into health and wellness, it remains essential to seek medical advice to fully understand treatments and their potential hazards, such as the severe consequences of bromide poisoning.

- The case also underscores the importance of addressing mental health concerns with licensed professionals rather than relying solely on AI-generated information, which may lack the nuanced context and explicit warnings needed for sound health care decisions.